Helm.ai Announces Level 3 Urban Perception System With ISO 26262 Components

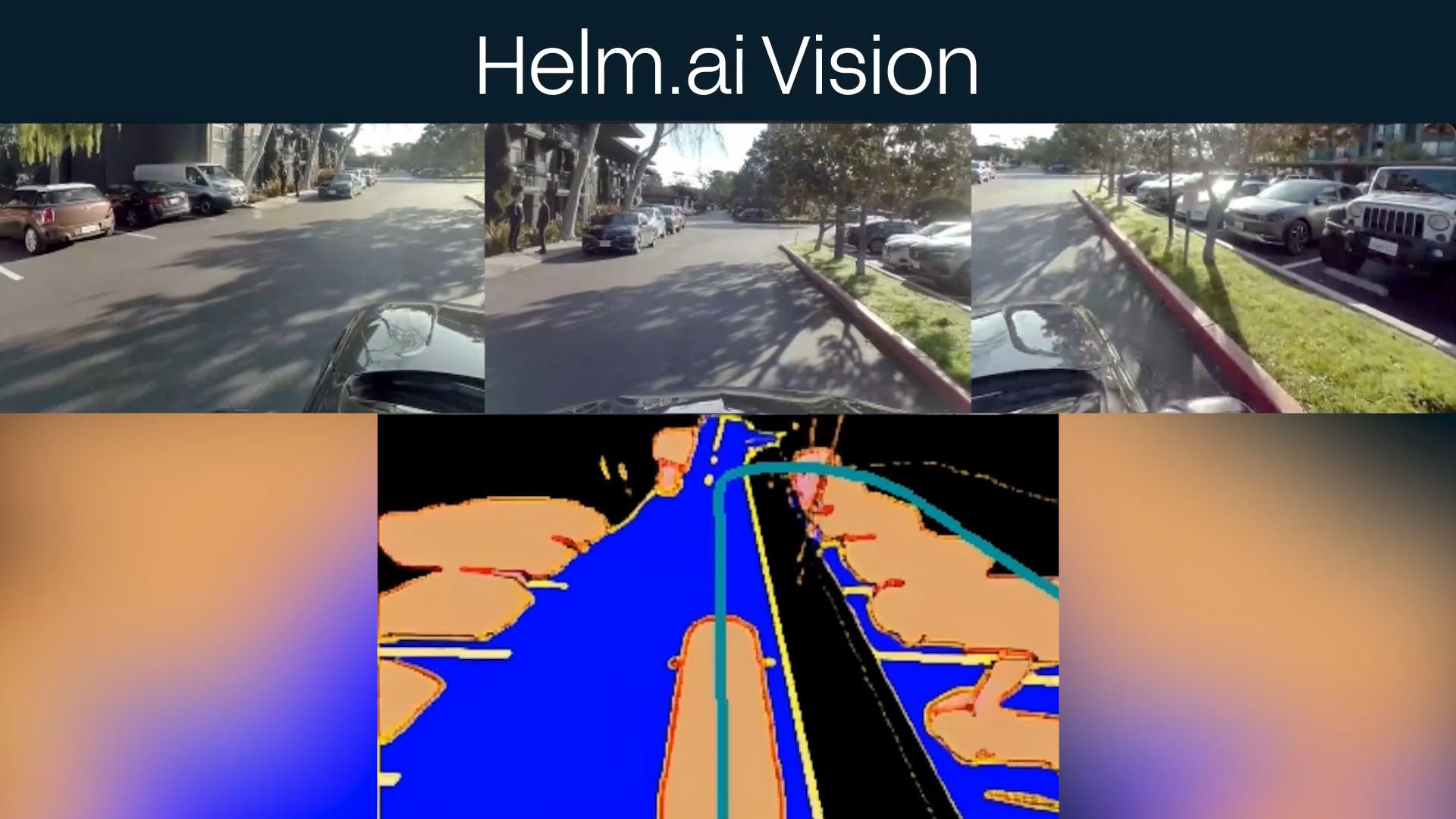

REDWOOD CITY, Calif.--(BUSINESS WIRE)--Helm.ai, a leading provider of advanced AI software for high-end ADAS, autonomous driving, and robotics automation, today announced Helm.ai Vision, a production-grade urban perception system designed for Level 2+ and Level 3 autonomous driving in mass-market vehicles. Helm.ai Vision delivers accurate, reliable, and comprehensive perception, providing automakers with a scalable and cost-effective solution for urban driving.

Following an assessment by UL Solutions, Helm.ai has achieved ASPICE Capability Level 2 for its engineering processes. In addition, components of its perception system, delivered as Software Safety Elements out of Context (SEooC) for Level 2+ systems, have been certified to meet ISO 26262 ASIL-B(D) requirements. The ASIL-B(D) certification confirms that these SEooC components can be integrated into production-grade vehicle systems as described in the safety manual, while ASPICE Level 2 reflects Helm.ai’s structured and controlled software development practices.

Built using Helm.ai’s proprietary Deep Teaching™ technology, Helm.ai Vision delivers advanced surround-view perception that removes the need for HD maps and Lidar sensors in systems up to Level 2+, and enables autonomous driving up to Level 3. Deep Teaching™ uses large-scale unsupervised learning from real-world driving data, reducing reliance on costly, manually labeled datasets. The system handles the complexities of urban driving across several international regions, including dense traffic, varied road geometries, and complex pedestrian and vehicle behavior. It performs real-time 3D object detection, full-scene semantic segmentation, and multi-camera surround-view fusion, enabling the self-driving vehicle to interpret its surroundings with high precision.

Additionally, Helm.ai Vision generates a bird’s-eye view (BEV) representation by fusing multi-camera input into a unified spatial map. This BEV representation is critical to the downstream performance of the intent prediction and planning modules.

Helm.ai Vision is modular by design and optimized for deployment on leading automotive hardware platforms, including Nvidia, Qualcomm, Texas Instruments, and Ambarella. Because Helm.ai Vision has already been validated for mass production and is fully compatible with the end-to-end (E2E) Helm.ai Driver path planning stack, it reduces validation effort and increases interpretability, streamlining production deployments of full-stack AI software.

“Robust urban perception, which culminates in the BEV fusion task, is the gatekeeper of advanced autonomy,” said Vladislav Voroninski, CEO and founder of Helm.ai. “Helm.ai Vision addresses the full spectrum of perception tasks required for high-end Level 2+ and Level 3 autonomous driving on production-grade embedded systems, enabling automakers to deploy a vision-first solution with high accuracy and low latency. Starting with Helm.ai Vision, our modular approach to the autonomy stack substantially reduces validation effort and increases interpretability, making it uniquely suited for near-term mass-market production deployment in software-defined vehicles.”

About Helm.ai

Helm.ai develops next-generation AI software for ADAS, autonomous driving, and robotics automation. Founded in 2016 and headquartered in Redwood City, CA, the company reimagines AI software development to make scalable autonomous driving a reality. Helm.ai offers full-stack real-time AI solutions, including deep neural networks for highway and urban driving, end-to-end autonomous systems, and development and validation tools powered by Deep Teaching™ and generative AI. The company collaborates with global automakers on production-bound projects. For more information on Helm.ai, including products, SDK, and career opportunities, visit https://helm.ai.

Contacts

Media contact

press@helm.ai